In reaction to criticism around the use of Messenger in some countries worldwide, particularly Myanmar, Facebook has introduced new tools that allow users of the app to report conversations that violate its community standards.

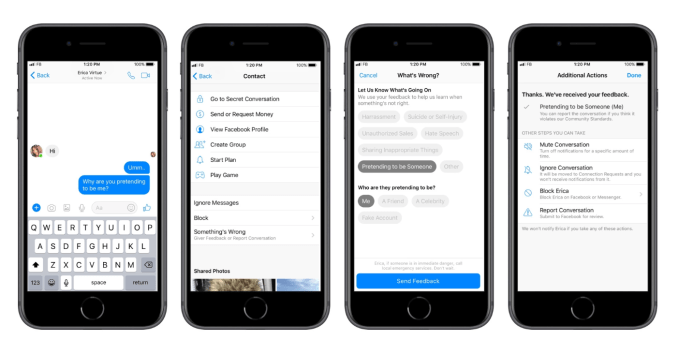

A new tab inside the Messenger app lets users flag messages under a range of categories that include harassment, hate speech and suicide. The claim is then escalated for review, Facebook said, after which it can be addressed. Previously, Messenger users could only flag inappropriate content via the web-based app or Facebook itself, which is clearly insufficient for a service with over a billion users, many of whom are mobile-only.

Facebook said the review team covers 50 languages. It has been widely criticised for its small team of Burmese-language reviewers, most of whom are based in Ireland (a six-hour time gap), although it has pledged to staff up on Burmese experts.

In April, six organizations teamed up to write a letter to Facebook CEO Mark Zuckerberg after he claimed in an interview that Facebook’s “systems” were able to detect and prevent hate speech in Myanmar, a country where racial tensions simmer and Facebook is considered the de facto internet.

Zuckerberg’s claim was incorrect, and he referred to an incident last September in which chain letters on Messenger inflamed tensions. Buddhist community figures received messages warning of a planned Muslim attack, while those in the Muslim community got messages claiming there was imminent violence planned by militant Buddhist groups.

Instead, local organizations stepped in to defuse the situation when they were made aware of it. Facebook’s AI or systems did nothing.

While Zuckerberg later apologized to the Myanmar-based organizations “for not being sufficiently clear about the important role that your organizations play in helping us understand and respond to Myanmar-related issues,” the group went on the offensive again, stating that Facebook’s actions are “nowhere near enough to ensure that Myanmar users are provided with the same standards of care as users in the U.S. or Europe.”

The changes to Messenger are a start, but Facebook has a lot more to do if it is to live up to its responsibility, not only in Myanmar but also in other countries such as Vietnam, Sri Lanka and India, where there are concerns that its platforms are not adequately policed.

Indeed, a recent UN Fact-Finding Mission concluded that social media has played a “determining role” in the Myanmar crisis, with Facebook identified as the chief actor. The issue was also raised in a Senate hearing with Zuckerberg last month.

from TechCrunch https://ift.tt/2Ko2yn6
